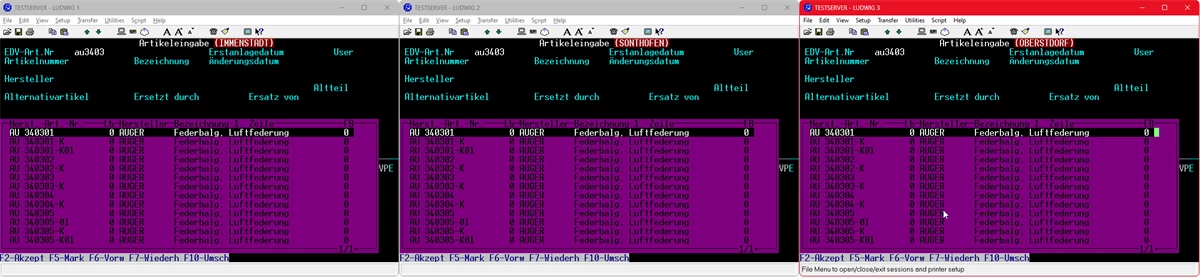

Our UniVerse application consists of 8 accounts, one for each of our 8 branches. Each account contains an item file. The master data in the item file is identical across all accounts; only the transaction data differs. Whenever master data in the file is written, the change is propagated to the item files in all accounts to keep them consistent.
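To make the setup concrete, here is a minimal conceptual model in Python, not UniVerse code. It assumes each item record can be split into a master-data part (replicated to every account) and a transaction-data part (local to one branch). The names `ItemRecord`, `Account`, `write_master`, `write_transaction`, the account names `BRANCH1` to `BRANCH8` and the fields `DESC`/`GROUP`/`STOCK` are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical model: 8 branch accounts, each with its own item file.
# Each item record holds master data (identical everywhere) and
# transaction data (different per branch).

@dataclass
class ItemRecord:
    master: dict = field(default_factory=dict)        # replicated to all accounts
    transactions: dict = field(default_factory=dict)  # local to one account

@dataclass
class Account:
    name: str
    items: dict = field(default_factory=dict)  # item ID -> ItemRecord

ACCOUNTS = [Account(f"BRANCH{n}") for n in range(1, 9)]

def write_master(accounts, item_id, master_data):
    """Propagate a master-data change to the item file in every account,
    leaving each account's transaction data untouched."""
    for acct in accounts:
        rec = acct.items.setdefault(item_id, ItemRecord())
        rec.master = dict(master_data)  # copy so accounts never share one dict

def write_transaction(account, item_id, key, value):
    """Transaction data is written only to the branch's own account."""
    account.items.setdefault(item_id, ItemRecord()).transactions[key] = value
```

For example, `write_master(ACCOUNTS, "A100", {"DESC": "Bolt M8", "GROUP": "FASTENERS"})` touches all 8 item files, while `write_transaction(ACCOUNTS[0], "A100", "STOCK", 42)` touches only the first branch.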

Although this approach is certainly not optimal, we do not want to change it, because the existing programs would be difficult to modify.

I ran a test in which I used `SET.INDEX ITEM TO H:\ALPHA\INDEXES\I_ITEM` to point the indexes of all 8 item files to the same location.

During a bulk import into the item file, which updates all 8 files, switching to a single shared index roughly doubled the speed.
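The condition in the question below can be illustrated with a simplified Python model. This is an assumption-laden sketch, not UniVerse's actual index format or update logic: it treats a secondary index as a map from indexed value to the set of record IDs, and models the shared index as the union of every file's entries, with no note of which file an entry came from. The function names are hypothetical. A real index maintained write by write may behave differently (for instance on deletes), so treat this only as a way to reason about when one index can stand in for eight.

```python
from collections import defaultdict

# Conceptual model only: value -> {record IDs}. NOT UniVerse's on-disk format.

def build_index(item_file, field_name):
    """Build a value -> {record IDs} index over a single item file."""
    index = defaultdict(set)
    for item_id, record in item_file.items():
        index[record[field_name]].add(item_id)
    return dict(index)

def build_shared_index(item_files, field_name):
    """Model one index shared by several files: every file's entries land
    in the same structure, with no record of which file they came from."""
    shared = defaultdict(set)
    for item_file in item_files:
        for value, ids in build_index(item_file, field_name).items():
            shared[value] |= ids
    return dict(shared)

def shared_index_is_faithful(item_files, field_name):
    """True when the shared index answers every lookup exactly as each
    file's own index would, i.e. all per-file indexes are identical."""
    shared = build_shared_index(item_files, field_name)
    return all(build_index(f, field_name) == shared for f in item_files)
```

In this model the shared index is only correct for every file when all files hold the same record IDs with the same indexed values; if one account is missing a record, or holds a different value for an indexed field, lookups in that account would return IDs or values that do not match its own data.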

My question is: is there any reason not to use a single index file for multiple data files, as long as all indexed fields are identical in every data file?

Greetings,

Thomas

------------------------------

Thomas Ludwig

System Builder Developer

Rocket Forum Shared Account

------------------------------