I'm with a system integrator helping a bank replace its current core banking system with a new one on IBM i, and iCluster is used for replication from the DC machine to the DR machine. We have installed the new DC and DR machines and are now in the pre-production test phase. My customer's IT team has come up with the following test scenario for iCluster:

1) A few bank tellers enter test transactions they have prepared in advance (open new accounts, deposit, withdrawal, etc.) on the DC machine while iCluster is running.

2) After 15 minutes, my customer cuts the data link between the DC and DR machines, but all tellers continue entering test transactions until they are finished (expected to take about 40 more minutes).

3) When the tellers finish entering test transactions, the DC-DR data link is restored.

4) The customer waits until I confirm that replication of all test transactions from the DC machine to the DR machine is complete. They then shut down the core banking application on the DC machine, start it on the DR machine, and check whether all the test transactions are visible there.

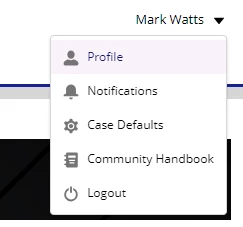

My question is: what actions should I take in iCluster from the beginning to the end of this test (with reference to the iCluster Main Menu on the DC machine) to ensure that it works as it should? The data link has gone down a few times before, at night, and I noticed from the DC machine that the DR node status was *FAILED and the replication group status was *UNKNOWN even after the data link was back up. All I did then was end both the group and the node and restart them. But since no one was working on the DC machine at the time, I do not know whether this is what should be done in the test scenario above.

If you need more information before making a suggestion, let me know.

Thanks in advance.

------------------------------

Satid Singkorapoom

IBM i SME

Rocket Forum Shared Account

------------------------------