Frequently Asked Questions

- What is processor affinity (binding)?

- How to set it up on Solaris?

- How to set it up on Windows (XP or 2000)?

- How can I do it in ECperf (SPECjAppServer2001)?

- What to keep in mind when scaling across different numbers of CPUs?

- How to interpret CPU-scaling data for ECperf (SPECjAppServer2001)?

- How to use the CPU-scalability graphs and data in a real deployment?

- How to use and interpret Borland Enterprise Server's EJBTimers in scalability runs?

- How to do linear scalability testing of BES using the perf example?

1. What is processor affinity (binding)?

Processor affinity means that, on a multi-CPU machine, a process (or a group of processes) runs only on a dedicated set of CPUs. In other words, the processes are bound to an isolated subset of the CPUs.

The feature can be used during performance benchmarking and also when deploying an application. The following use cases can motivate setting processor affinity:

- A single Java Virtual Machine (JVM) may not scale beyond n CPUs. In that case the T CPUs of the machine are divided into T/n subsets, and each subset can host a dedicated AppServer process. This behavior was also observed in the CSIRO benchmark, where running multiple client JVMs (i.e. with the -client JVM flag) gave better throughput than running a single VM on the whole machine. This was especially true for JDK 1.3.x VMs.

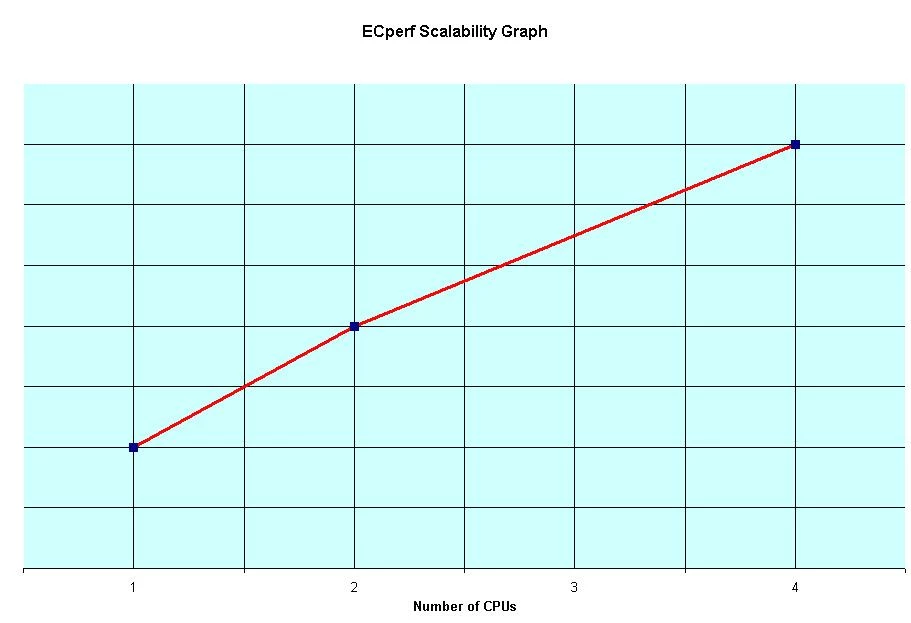

- Linear scalability testing and experimentation. This means incrementally allocating (enabling) the CPUs available to a process and measuring its scalability (i.e. the throughput improvement). For an ideal product, the CPU-vs-throughput graph would be linear (i.e. a straight line). The graph shows whether the product will be able to use all the resources and power of the machine should the need arise. It can also give very useful hints about the number of AppServer partition processes that should be started on a given machine: if a single partition can scale efficiently to use all the resources, you can simply run one instance on the whole machine.

- To discover general patterns (rules of thumb). For example, for a given OS and CPU architecture you may find that you need one partition process for every 4 CPUs.

- Interrupts handled by dedicated processor(s). In some cases it has been observed that dedicating a CPU to handling network (TCP/IP) interrupts makes the CPU usage of other processes smoother and more efficient. On Solaris, interrupts can be disabled for a given set of CPUs using the `psrset` command discussed below.

- Application availability increases, because with multiple instances a garbage collection in one JVM doesn't block the only instance.

- It has sometimes been observed that running processes on dedicated CPUs avoids the underlying operating system's process-scheduling overhead.

- EJBTimers can be turned on to see whether any method stands out as a bottleneck (potentially hindering linear scalability). Note that EJBTimers have an overhead of their own (about 15%); take that into account, or run the experiments both with and without timers.

The following are some example scenarios:

- On an 8-CPU machine, two AppServer instances are running: instance 1 runs on CPUs 0, 1, 2, 3 and instance 2 on CPUs 4, 5, 6, 7.

- On a 4-CPU machine, an AppServer partition runs on 2 dedicated CPUs, while the OS and other AppServer components (e.g. JDS, Naming service) run on the rest.

- For linear-scalability testing on an 8-CPU machine, a process is bound to CPU0 and its maximum TPS is found; then a second CPU is added to the set the process is bound to. Ideally, the TPS of the partition process, which now has 2 CPUs, should double.
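The scenarios above boil down to splitting the machine's CPU IDs into per-instance subsets (T/n subsets of n CPUs each). The following Python sketch shows that split; `partition_cpus` is a hypothetical helper, not part of BES or any OS tool, and it assumes CPU IDs are contiguous from 0 (check the real IDs with your OS tools, e.g. `mpstat` on Solaris). Any CPUs left over can be given to the OS and other AppServer components.

```python
from typing import List, Tuple


def partition_cpus(total_cpus: int, cpus_per_instance: int) -> Tuple[List[List[int]], List[int]]:
    """Split CPU IDs 0..total_cpus-1 into total_cpus // cpus_per_instance
    subsets of cpus_per_instance CPUs each.

    Returns (subsets, leftover); leftover CPUs can host the OS and other
    AppServer components (e.g. JDS, Naming service).
    """
    if cpus_per_instance <= 0:
        raise ValueError("cpus_per_instance must be positive")
    ids = list(range(total_cpus))
    count = total_cpus // cpus_per_instance
    subsets = [ids[i * cpus_per_instance:(i + 1) * cpus_per_instance] for i in range(count)]
    leftover = ids[count * cpus_per_instance:]
    return subsets, leftover


# 8 CPUs with 4 per instance: instance 1 on CPUs 0-3, instance 2 on CPUs 4-7.
print(partition_cpus(8, 4))
```

The sketch keeps each subset contiguous, which matches the 8-CPU example above; on hardware where CPU numbering does not follow physical boards or cores, you may prefer to build the subsets by hand.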

2. How to set it up on Solaris?

- Get root access to the machine

- Step 1 : run `mpstat` to see the processor IDs

- Step 2 : Use the `psrset` command to create a processor set. The command prints the ID of the new set.

- Step 3 : Use the `psrset` command to bind the process to the processor set.

- Step 4 : Use the `psrset` command to delete the processor set.

Example (assumes you have root access):

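Step 1. bash-2.01$ mpstat

| CPU | minf | mjf | xcal | intr | ithr | csw | icsw | migr | smtx | srw | syscl | usr | sys | wt | idl |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 25 | 0 | 41 | 113 | 101 | 151 | 12 | 9 | 11 | 0 | 50 | 4 | 1 | 1 | 93 |
| 1 | 26 | 0 | 136 | 182 | 207 | 107 | 11 | 9 | 11 | 0 | 57 | 5 | 1 | 1 | 93 |
| 2 | 26 | 0 | 38 | 171 | 161 | 131 | 12 | 9 | 11 | 0 | 39 | 4 | 1 | 1 | 93 |
| 3 | 26 | 0 | 46 | 160 | 149 | 134 | 12 | 10 | 11 | 0 | 51 | 5 | 1 | 1 | 93 |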

Step 2. `psrset -c 0 1` // creates a processor set from CPUs 0 and 1; the CPU IDs come from the mpstat output above

Step 3. `psrset -b 1 2325` // where 1 is the processor set ID and 2325 is the process (e.g. partition) ID

Step 4. `psrset -d 1` // removes the processor set

Note: Use `man psrset` to learn about various other useful options, such as disabling interrupts for a particular set of CPUs.
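The Solaris steps above can also be scripted. The following Python sketch only builds and prints the `psrset` command lines, and executes them through `subprocess` only when `dry_run=False` (which requires root on Solaris). The helper names are hypothetical. The flags used are `-c` (create), `-b` (bind), `-f` (disable interrupts) and `-d` (delete); verify them against `man psrset` on your release. The sketch assumes you copy the set ID from the output of the create command rather than parsing it.

```python
import shlex
import subprocess


def psrset_create(cpu_ids):
    """psrset -c: create a processor set from the given processor IDs."""
    return ["psrset", "-c", *(str(cpu) for cpu in cpu_ids)]


def psrset_bind(set_id, pid):
    """psrset -b: bind an existing process (e.g. a partition) to the set."""
    return ["psrset", "-b", str(set_id), str(pid)]


def psrset_disable_interrupts(set_id):
    """psrset -f: disable interrupts on every processor in the set."""
    return ["psrset", "-f", str(set_id)]


def psrset_delete(set_id):
    """psrset -d: remove the processor set."""
    return ["psrset", "-d", str(set_id)]


def run(cmd, dry_run=True):
    """Print the command; execute it only when dry_run is False (needs root)."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Set ID 1 and PID 2325 are the example values from the steps above;
    # in a real run, take the set ID from the output of the create command.
    for cmd in (psrset_create([0, 1]), psrset_bind(1, 2325), psrset_delete(1)):
        run(cmd)
```

Keeping the dry run as the default makes it easy to review the exact commands before touching a shared benchmark machine.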

3. How to set it up on Windows (XP or 2000)?

- Open Task Manager

- On the Processes tab, right-click the partition.exe process and choose "Set Affinity"

- Check the CPU IDs (example CPU0, CPU1) that you want the process to run on.
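Task Manager's "Set Affinity" dialog edits the process affinity mask, a bit mask in which bit i being set allows the process to run on CPU i. This small Python sketch of that mapping (the function names are hypothetical) can help when recording or comparing affinity settings across runs:

```python
def affinity_mask(cpu_ids):
    """Build an affinity bit mask: bit i set means 'may run on CPU i'."""
    mask = 0
    for cpu in cpu_ids:
        mask |= 1 << cpu
    return mask


def cpus_from_mask(mask):
    """Inverse mapping: list the CPU IDs whose bits are set in the mask."""
    return [i for i in range(mask.bit_length()) if mask & (1 << i)]


print(hex(affinity_mask([0, 1])))        # 0x3
print(hex(affinity_mask([4, 5, 6, 7])))  # 0xf0
print(cpus_from_mask(0xF0))              # [4, 5, 6, 7]
```

For example, checking CPU0 and CPU1 in the dialog corresponds to the mask 0x3.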

4. How can I do it in ECperf (now called SPECjAppServer200x)?

The procedure is:

- Step 1: Start the benchmark by allocating the smallest number of CPUs, say 1.

- Step 2: Find the best throughput (txRate in the case of ECperf) that can be attained on the configured CPU set.

- Step 3: Record the throughput (in the appropriate units, for example TPS or BOPS) for this set, along with any other relevant data such as the thread pool size.

- Step 4: Increase the number of CPUs available to BES, and go to Step 2.
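The loop described in these steps can be sketched in Python as follows. This is only an outline under stated assumptions: `run_benchmark(cpus, load)` is a hypothetical hook that performs one benchmark pass at a given load (for example a txRate) and returns the measured throughput, or `None` if the run fails; `fake_benchmark` is an invented stand-in used only to exercise the loop.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple

# Hypothetical hook: (cpus, load) -> throughput, or None if the run failed.
Benchmark = Callable[[int, int], Optional[float]]


def best_throughput(cpus: int, run_benchmark: Benchmark,
                    start_load: int = 1, max_load: int = 1000) -> Tuple[Optional[int], float]:
    """Step 2: raise the load one step at a time until the run fails or
    throughput stops improving; return the best (load, throughput) seen."""
    best_load: Optional[int] = None
    best = 0.0
    load = start_load
    while load <= max_load:
        result = run_benchmark(cpus, load)
        if result is None or result <= best:
            break
        best_load, best = load, result
        load += 1
    return best_load, best


def scaling_run(cpu_counts: Iterable[int],
                run_benchmark: Benchmark) -> Dict[int, Tuple[Optional[int], float]]:
    """Steps 1, 3 and 4: repeat the search for each CPU count and record it."""
    return {cpus: best_throughput(cpus, run_benchmark) for cpus in cpu_counts}


def fake_benchmark(cpus: int, load: int) -> Optional[float]:
    """Invented stand-in: a run passes while load <= 1.4 * cpus."""
    return float(load) if load <= 1.4 * cpus else None


print(scaling_run([1, 2, 4, 8], fake_benchmark))
```

A real run would also record the thread pool size and other settings alongside each result, as Step 3 suggests.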

Actual data: CPUs go on the X-axis and normalized txRates on the Y-axis. Each txRate is normalized, i.e. divided by the best txRate obtained on 1 CPU, so the 1-CPU value is 1 by definition and the 2-CPU value is the best 2-CPU txRate divided by the best 1-CPU txRate.

| CPUs | Normalized txRate |
|---|---|
| 1 | 1 |
| 2 | 2 |
| 4 | 3.5 |
| 8 | 9.5 |

Notes:

- The graph doesn't contain the 8-CPU data point.

- Actual throughput numbers are intentionally omitted, as ECperf/SPECj doesn't allow numbers to be used outside the context of a legal submission.
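The normalization used in the table, plus a per-CPU efficiency figure that makes deviations from a straight line easy to spot, can be computed as below. This is a minimal sketch with hypothetical helper names; the raw txRates in the test are invented, while the efficiency example uses the normalized values from the table.

```python
def normalize(best_rates):
    """Divide each CPU count's best txRate by the best txRate on 1 CPU."""
    base = best_rates[1]
    return {cpus: rate / base for cpus, rate in sorted(best_rates.items())}


def efficiency(normalized):
    """Normalized value per CPU: 1.0 is perfectly linear, >1.0 super-linear."""
    return {cpus: value / cpus for cpus, value in normalized.items()}


normalized = {1: 1.0, 2: 2.0, 4: 3.5, 8: 9.5}  # values from the table above
print(efficiency(normalized))  # {1: 1.0, 2: 1.0, 4: 0.875, 8: 1.1875}
```

An efficiency above 1.0 (as at 8 CPUs here) is a hint to look for a measurement artifact rather than a genuine super-linear gain.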

5. What to keep in mind when scaling across different numbers of CPUs?

In an ideal environment a single process would scale to handle increased client load by using all the available resources of the machine. In practice, however, the following may need to be changed across runs:

- The number of VM instances may need to be increased, and multiple CPU subsets (i.e. one for each instance) may need to be created.

- The VBJ thread pool size may need to be adjusted.

- EJBTimers may need to be turned on (i.e. check the "enable statistics collection" flag in the BES Console for the EJBContainer).

- The maxPoolSize of the JDBC pool, if one is used, may need to be adjusted. Note that multiple VMs lead to more database connections, because each VM has its own JDBC pool.

- If you need to run multiple instances, you need to know which load-balancing scheme to use to guarantee that all instances are used. For example, if naming-service-based load balancing is used (the kind used in the SPECjAppServer200x apps), you may need to run with vbroker.naming.propBindOn=1.
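The first and fourth points interact: every extra VM brings its own JDBC pool. A back-of-the-envelope Python sketch of that arithmetic, in which `plan_instances` is hypothetical and `db_connection_limit` stands in for whatever session limit your database server enforces:

```python
def plan_instances(total_cpus: int, cpus_per_instance: int,
                   max_pool_size: int, db_connection_limit: int):
    """Return (instances, total DB connections, fits within the DB limit?)."""
    instances = total_cpus // cpus_per_instance
    connections = instances * max_pool_size  # each VM has its own JDBC pool
    return instances, connections, connections <= db_connection_limit


print(plan_instances(8, 4, 40, 100))   # (2, 80, True)
print(plan_instances(32, 4, 40, 200))  # (8, 320, False)
```

When the check fails, either lower maxPoolSize per instance or raise the database's connection limit before the run.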

6. How to interpret CPU-scaling data for ECperf (SPECjAppServer2001)?

ECperf (SPECjAppServer) is a very coarse-grained benchmark (each txRate increment means at least 8 additional driver threads).

Also note that the data shown in the above figure looks super-linear (better than linear). The reason is the way measurements are done for ECperf/SPECj: the injection rate is a very imprecise measurement at low CPU counts and is subject to severe truncation.

For example, consider the case where the "ideal" injection rate is 1.4 per CPU. Then for 1 CPU we would get IR=1=truncate(1.4); for 2 CPUs, IR=2=truncate(2.8); for 4 CPUs, IR=5=truncate(5.6); and for 8 CPUs, IR=11=truncate(11.2).

So, theoretically, we could get the following (hypothetical data illustrating how a super-linear graph can arise in an ECperf system):

| CPUs | Normalized metric |
|---|---|
| 1 | 1 |
| 2 | 2 |
| 4 | 5 |
| 8 | 11 |

This again looks super-linear. I believe that is what we are seeing, together with the fact that our reading at 4 CPUs was non-optimal. Note that these measurements were made with BES 4.5.1, and there have been several performance enhancements since then.
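The truncation effect can be reproduced in a few lines of Python. This is a minimal sketch: `observed_ir` is a hypothetical helper, and it models only the integer truncation of an ideal per-CPU rate (1.4 here; 1.49 yields the same truncated values), not any other ECperf run rule.

```python
import math


def observed_ir(ideal_ir_per_cpu: float, cpus: int) -> int:
    """Injection rates are integers, so the usable IR is the ideal one truncated."""
    return math.floor(ideal_ir_per_cpu * cpus)


base = observed_ir(1.4, 1)
for cpus in (1, 2, 4, 8):
    ir = observed_ir(1.4, cpus)
    # normalized metric, then per-CPU efficiency (1.0 == perfectly linear)
    print(cpus, ir / base, ir / base / cpus)
```

The printed efficiencies are 1.0, 1.0, 1.25 and 1.375: the underlying rate is perfectly linear, yet truncation of the 1-CPU baseline makes the larger configurations look super-linear.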

7. How to use the CPU-scalability graphs and data in a real deployment?

Usually you should try to detect an obvious pattern and use it for the real deployment.

As an example:

- Assume that, on an 8-CPU machine, the graph is linear from 1 to 4 CPUs and then becomes flat or non-linear. In this case the suggestion would be to run 2 BES partition instances on the machine, with an appropriate load-balancing strategy to make sure both of them are used equally. For a given CPU/OS type, the pattern may also apply to even larger numbers of CPUs. For example, if the machine has 32 CPUs, you may not need to test CPU scalability all the way to 32; a good guess can be made from the pattern.

- If EJBTimers (i.e. statistics collection) were on during the experiment, pay attention to any hotspot method that stands out as the bottleneck.
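The rule of thumb in the first example can be sketched as: find the largest CPU count that still scales (nearly) linearly, then run one partition per block of that size. In this Python sketch the 0.9 efficiency threshold and the measurements are hypothetical, chosen to match the "linear up to 4 CPUs, then flat" scenario; pick a threshold that suits your own tolerance.

```python
def linear_limit(normalized, threshold=0.9):
    """Largest CPU count up to which per-CPU efficiency stays >= threshold."""
    limit = None
    for cpus in sorted(normalized):
        if normalized[cpus] / cpus < threshold:
            break
        limit = cpus
    return limit


def suggested_instances(total_cpus, normalized, threshold=0.9):
    """One partition per 'linear' block of CPUs."""
    limit = linear_limit(normalized, threshold)
    if limit is None:
        raise ValueError("no CPU count met the efficiency threshold")
    return max(1, total_cpus // limit)


# Hypothetical measurements: linear up to 4 CPUs, then flattening out.
measured = {1: 1.0, 2: 2.0, 4: 3.9, 8: 4.5}
print(linear_limit(measured))             # 4
print(suggested_instances(8, measured))   # 2
print(suggested_instances(32, measured))  # 8
```

Extrapolating to 32 CPUs this way is exactly the "good guess" mentioned above, and it should still be confirmed with at least one measured run.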

8. How to use and interpret Borland Enterprise Server's EJBTimers in scalability runs?

As mentioned in earlier FAQs, linear scalability runs can be done with the server's EJBTimers turned ON. The following is real data taken using BES 4.5.1 on 1-, 2-, and 4-CPU configurations. Ideally, the CPU percentages should be quite similar for each measured event across the different CPU counts.

The reason is that, as we increase the load, we increase the CPU count proportionately as well. This is what we observe in the following experiment; however, there are some differences due to variations in the TomCat configurations that were tried. These variations are explained below.

This information was used to show that BES is capable of scaling linearly and also that ECperf(tm) is a linear benchmark. The following data and discussion were submitted to the expert group. Each entry below is prefixed with [1], [2] or [4], indicating whether the run was on a box with 1, 2, or 4 CPUs. Otherwise, the hardware and configuration were identical for each run (except for TomCat, as discussed below).

Note: Some of the timers were renamed in the BES 5.x releases, because after the introduction of local interfaces there was a need to distinguish two dispatch paths. However, their use and analysis are equally applicable to BES 5.x, and hence they are discussed here. Also, ECperf 1.0 was used in this experiment, and there have been some minor changes in method names etc. since then.

| Run | Action | Total (ms) | Count | T/C (ms) | Percent |
|---|---|---|---|---|---|
| [1] | Dispatch_POA | 62181 | 16033 | 3.88 | 2.38% |
| [2] | Dispatch_POA | 57283 | 20267 | 2.82 | 2.2% |
| [4] | Dispatch_POA | 171859 | 41249 | 4.16 | 1.86% |
| [1] | Dispatch_Home | 158624 | 82141 | 1.93 | 6.06% |
| [2] | Dispatch_Home | 163365 | 125938 | 1.29 | 6.28% |
| [4] | Dispatch_Home | 528250 | 260885 | 2.02 | 5.74% |
| [1] | Dispatch_Remote | 162216 | 297736 | 1.83 | 6.20% |
| [2] | Dispatch_Remote | 179541 | 413560 | 0.43 | 6.9% |
| [4] | Dispatch_Remote | 574053 | 904800 | 0.63 | 6.24% |
| [1] | Dispatch_Bean | 766279 | 642263 | 1.19 | 29.28% |
| [2] | Dispatch_Bean | 606457 | 906561 | 0.66 | 23.31% |
| [4] | Dispatch_Bean | 2666198 | 1976858 | 1.34 | 28.98% |
| [1] | EntityHome | 221448 | 277261 | 0.80 | 8.46% |
| [2] | EntityHome | 212445 | 384976 | 0.55 | 8.16% |
| [4] | EntityHome | 753717 | 846196 | 0.89 | 8.19% |
| [1] | ResourceCommit | 177083 | 10153 | 17.44 | 6.77% |
| [2] | ResourceCommit | 220344 | 14666 | 15.02 | 8.47% |
| [4] | ResourceCommit | 785920 | 28428 | 27.64 | 8.54% |
| [1] | CMP | 63711 | 266361 | 0.24 | 2.43% |
| [2] | CMP | 69639 | 374205 | 0.18 | 2.67% |
| [4] | CMP | 259927 | 807816 | 0.32 | 2.82% |
| [1] | CMP_Update | 129691 | 32929 | 3.94 | 4.96% |
| [2] | CMP_Update | 137065 | 46507 | 2.94 | 5.26% |
| [4] | CMP_Update | 377318 | 100283 | 3.76 | 4.1% |
| [1] | CMP_Query | 619500 | 76705 | 8.08 | 23.67% |
| [2] | CMP_Query | 612135 | 107039 | 5.71 | 23.53% |
| [4] | CMP_Query | 1686188 | 231964 | 7.26 | 18.33% |

| Run | Total (ms) | Count | T/C (ms) | Percent | Action |
|---|---|---|---|---|---|
| [1] | 146418 | 23705 | 6.18 | 5.6% | SupplierCmp:SupplierEnt.getPartSpec() |
| [2] | 114240 | 32439 | 3.52 | 4.39% | SupplierCmp:SupplierEnt.getPartSpec() |
| [4] | 447482 | 73326 | 6.1 | 4.86% | SupplierCmp:SupplierEnt.getPartSpec() |
| [1] | 55321 | 1051 | 52.64 | 2.11% | WorkOrderCmp:WorkOrderEnt.process() |
| [2] | 54091 | 1511 | 35.79 | 2.07% | WorkOrderCmp:WorkOrderEnt.process() |
| [4] | 216112 | 2999 | 72.06 | 2.34% | WorkOrderCmp:WorkOrderEnt.process() |
| [1] | 19864 | 2241 | 8.86 | 0.76% | OrderCmp:OrderEnt.getStatus() |
| [2] | 14554 | 2998 | 4.85 | 0.55% | OrderCmp:OrderEnt.getStatus() |
| [4] | 49503 | 4966 | 9.96 | 0.53% | OrderCmp:OrderEnt.getStatus() |
| [1] | 291484 | 809 | 360.3 | 11.14% | BuyerSes:BuyerSes.purchase() |
| [2] | 108593 | 1164 | 93.29 | 4.17% | BuyerSes:BuyerSes.purchase() |
| [4] | 938556 | 2502 | 375.12 | 10.2% | BuyerSes:BuyerSes.purchase() |
| [1] | 26192 | 1157 | 22.64 | 1.0% | RuleBmp:EntityBean.ejbLoad() |
| [2] | 22818 | 1636 | 13.94 | 0.87% | RuleBmp:EntityBean.ejbLoad() |
| [4] | 58033 | 3230 | 17.96 | 0.63% | RuleBmp:EntityBean.ejbLoad() |
| [1] | 27744 | 10153 | 2.73 | 1.06% | ComponentCmp:ComponentEnt.getQtyRequired() |
| [2] | 31904 | 14639 | 2.17 | 1.22% | ComponentCmp:ComponentEnt.getQtyRequired() |
| [4] | 106251 | 32658 | 3.25 | 1.15% | ComponentCmp:ComponentEnt.getQtyRequired() |
| [1] | 30424 | 1157 | 26.30 | 1.16% | CustomerCmp:CustomerEnt.getPercentDiscount() |
| [2] | 33224 | 1636 | 20.3 | 1.27% | CustomerCmp:CustomerEnt.getPercentDiscount() |
| [4] | 80213 | 3230 | 24.83 | 0.87% | CustomerCmp:CustomerEnt.getPercentDiscount() |

In short, these numbers indicate that ECperf scales extremely well on BES on vertically scaled hardware. That is, there is no particular operation or overhead whose share of the total time grows as the hardware allocation increases. In fact, all operations seem to account for a roughly constant share of the time in each of the three runs (except the TomCat-related call).

Explanation

There are two tables above. The first gives EJB Container level statistics. In the container, we measure the time spent in various parts of the container, and provide the following information (please see BES docs for complete details on EJBTimers):

- Action: What the particular action was.

- Total (ms): Total number of milliseconds spent on the action.

- Count: Number of times the action was performed.

- T/C (ms): Total divided by Count: average time for each action.

- Percent: Percentage of the total time spent on that action.

In both tables, we omit measurements that account for less than 0.5% of CPU time. In the first table, we list the following Container actions:

- Dispatch_POA: Time spent receiving the TCP request and sending the reply.

- Dispatch_Home: Time spent in the container dispatching methods to EJBHome objects.

- Dispatch_Remote: Time spent in the container dispatching methods to EJBRemote objects.

- Dispatch_Bean: Time spent in the bean methods. This is broken down in detail, method by method, in the second table.

- EntityHome: Time spent in the container implementing EJBHome-related operations specific to Entity beans.

- ResourceCommit: Time spent specifically in committing the work done on the resource (that is, in committing to Oracle).

- CMP: Time spent in the CMP engine (excluding other CMP tasks listed explicitly).

- CMP_Update: Time spent in the CMP engine doing SQL updates.

- CMP_Query: Time spent in the CMP engine doing SQL queries.

The second table provides a method-by-method breakdown, again listing only methods that account for 0.5% or more of CPU time in at least one run.

Analysis

The three runs are surprisingly similar in terms of CPU allocation. The only significant difference between them is in one method:

| Run | Total (ms) | Count | T/C (ms) | Percent | Action |
|---|---|---|---|---|---|
| [1] | 291484 | 809 | 360.3 | 11.14% | BuyerSes:BuyerSes.purchase() |
| [2] | 108593 | 1164 | 93.29 | 4.17% | BuyerSes:BuyerSes.purchase() |
| [4] | 938556 | 2502 | 375.12 | 10.2% | BuyerSes:BuyerSes.purchase() |

This method calls out to the TomCat process. The anomaly turned out to be caused by TomCat configuration issues, and in the above runs we used different configurations while trying to resolve the problem. In the 1-CPU and 4-CPU runs, we used a TomCat process running on a remote box; in the 2-CPU run, we used a TomCat process running on the AppServer box. This explains the difference in performance, since the local TomCat responded significantly faster than the remote one, due to loopback TCP calls. Otherwise, things look completely linear.
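This kind of comparison can be automated when there are many timers to scan. The following Python sketch (the `TimerRow` type and `unstable_actions` function are hypothetical, not part of BES) recomputes the T/C column and flags any action whose Percent varies by more than a chosen factor across the runs. The rows are copied from the tables above; the factor of 2 is an arbitrary illustrative threshold.

```python
from dataclasses import dataclass


@dataclass
class TimerRow:
    cpus: int
    action: str
    total_ms: int
    count: int
    percent: float

    @property
    def avg_ms(self) -> float:
        # T/C column: total time divided by the number of invocations
        return self.total_ms / self.count


def unstable_actions(rows, max_ratio=2.0):
    """Actions whose largest Percent exceeds max_ratio times their smallest."""
    by_action = {}
    for row in rows:
        by_action.setdefault(row.action, []).append(row.percent)
    return sorted(a for a, p in by_action.items() if max(p) / min(p) > max_ratio)


ROWS = [
    TimerRow(1, "Dispatch_Bean", 766279, 642263, 29.28),
    TimerRow(2, "Dispatch_Bean", 606457, 906561, 23.31),
    TimerRow(4, "Dispatch_Bean", 2666198, 1976858, 28.98),
    TimerRow(1, "CMP_Query", 619500, 76705, 23.67),
    TimerRow(2, "CMP_Query", 612135, 107039, 23.53),
    TimerRow(4, "CMP_Query", 1686188, 231964, 18.33),
    TimerRow(1, "BuyerSes:BuyerSes.purchase()", 291484, 809, 11.14),
    TimerRow(2, "BuyerSes:BuyerSes.purchase()", 108593, 1164, 4.17),
    TimerRow(4, "BuyerSes:BuyerSes.purchase()", 938556, 2502, 10.2),
]

print(unstable_actions(ROWS))    # ['BuyerSes:BuyerSes.purchase()']
print(round(ROWS[0].avg_ms, 2))  # 1.19
```

A ratio test is used rather than an absolute difference so that small timers and large timers are judged on the same scale.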

9. How to do linear scalability testing of BES using the perf example?

Useful information about the linear scalability behavior on a given machine configuration can be obtained using the BES example that can be found at:

<bes-install>/examples/ejb/perf

This example is in fact a very fine-grained benchmark, and it gives more accurate information about performance and machine characteristics than ECperf/SPECj can provide (see the truncation issue discussed above). Since the perf benchmark offers quite a few options, it is recommended that you read the accompanying documentation and choose the tuning that best matches your application's characteristics. Here are some guidelines:

- Set the read percentage (using read_perc) to mimic your application.

- Choose the right block size, and keep it constant.

- Vary the number of clients (i.e. Thrasher threads) to find the best throughput for the given set of CPUs.

- To simulate real clients, you may want to have some "think" time. This can be achieved by using the -sleep_min and -sleep_max flags of Thrasher. Note that if you use these flags, you may need to scale to a larger number of Thrasher threads, as not all of them will be active at the same time.

Note: Keep in mind that the shipping version of perf doesn't currently have ejb-links between the session and entity beans, which may inadvertently lead to RPCs if it is not deployed properly. For example, if you use naming-service-based clustering such that only one of the partitions hosts the naming service, a SessionBean may end up invoking an EntityBean in a different partition (an undesirable situation). For now, the workaround is to create ejb-links using the BES DDEditor if you decide to use this configuration.
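To estimate how many extra Thrasher threads think time requires, note that each thread has a request in flight only for the fraction response / (response + think) of the time. The Python sketch below applies that approximation. `threads_needed` is hypothetical, and it assumes think times are spread uniformly between the sleep minimum and maximum. Milliseconds are used only for illustration; check the perf documentation for the units Thrasher actually uses.

```python
import math


def threads_needed(target_active: int, response_ms: float,
                   sleep_min_ms: float, sleep_max_ms: float) -> int:
    """Approximate thread count that keeps target_active requests in flight
    when every thread thinks between calls."""
    think_ms = (sleep_min_ms + sleep_max_ms) / 2  # assumes uniform think times
    return math.ceil(target_active * (response_ms + think_ms) / response_ms)


# 20 concurrent requests, 10 ms responses, think time between 20 and 60 ms.
print(threads_needed(20, 10, 20, 60))  # 100
```

Because the response time itself grows as the server saturates, treat the result as a starting point for the thread sweep rather than a final answer.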
